TalenTrack

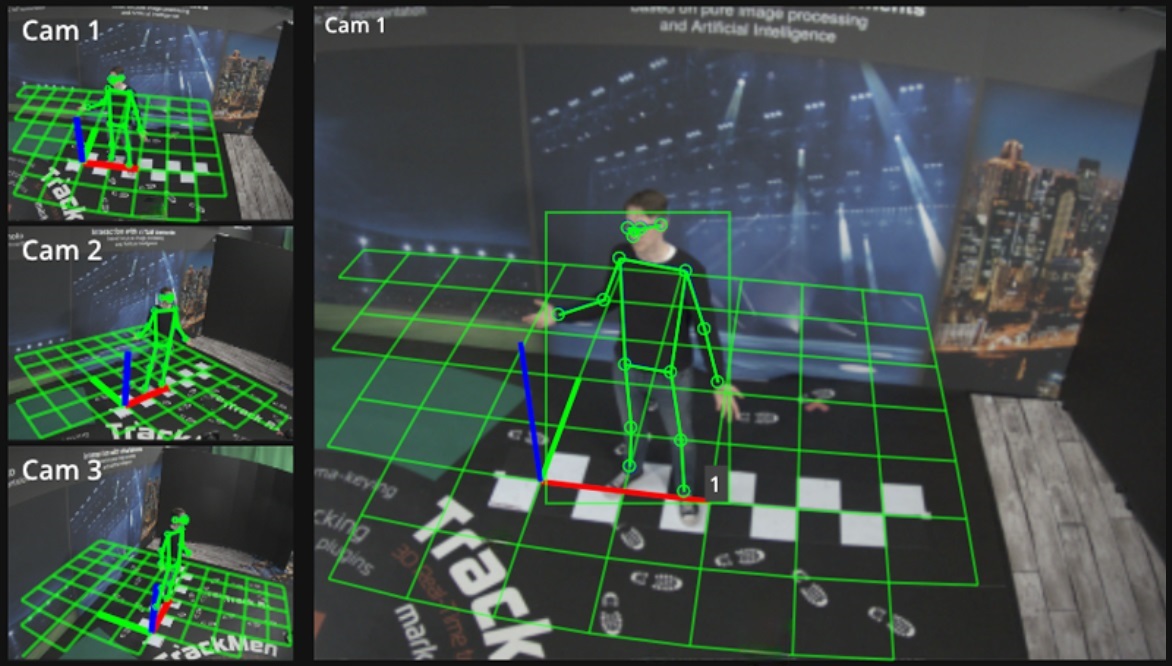

TalenTrack is a markerless 3D body pose tracking system based solely on image analysis with deep learning algorithms. Tracked persons do not need to carry markers, transmitters, or other equipment, and no per-person body calibration is required. Tracking runs in real time: several cameras with overlapping fields of view analyze the video, identify the persons in it, recognize each human body, and estimate the positions of its joints. These joint positions are then located in 3D space and sent to a graphics engine.

Setup: Hardware, Software, Camera Settings

Calibration: Camera Calibration, Coordinate System, Masking

Using TalenTrack: Tracking Persons, Data Sending, Delay, Troubleshooting

System Specifications

Number of persons tracked:

Up to 4 persons

Base area of tracking:

For 3 cameras: 5x3 meters

Can be extended by using more than 3 cameras (to be determined with the help of Pixotope staff)

Tracked body parts (position of the main joint or center of each part):

Arms: shoulder, elbow, wrist

Legs: hip, knee, ankle

Torso: pelvis, hip center

Head: general position

Tracking data format (UDP stream to one or multiple graphics engines):

Proprietary TalenTrack format: sends all joints in one stream

TrackMen camera tracking format: sends one single joint per sender

FreeD format: sends one single joint per sender
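
To illustrate what receiving one joint per sender could look like on the engine side, the following is a minimal sketch of a FreeD type D1 packet parser. It assumes the commonly documented 29-byte D1 layout (message type `0xD1`, ID byte, pan/tilt/roll, X/Y/Z in 1/64 mm, zoom, focus, spare, checksum). That TalenTrack places the joint position in the X/Y/Z fields, and which ID and port belong to which joint, are assumptions made here for illustration and are not confirmed by this page. The names `parse_freed_d1`, `freed_checksum`, and `receive_joint_packets` are hypothetical helpers, not part of any TalenTrack API.

```python
import socket

FREED_D1_TYPE = 0xD1    # message type byte of a FreeD D1 packet
FREED_D1_LENGTH = 29    # total packet length in bytes, including checksum


def _int24(raw: bytes) -> int:
    """Decode a 3-byte big-endian signed integer."""
    return int.from_bytes(raw, "big", signed=True)


def freed_checksum(body: bytes) -> int:
    """FreeD checksum: 0x40 minus the sum of all preceding bytes, modulo 256."""
    return (0x40 - sum(body)) % 256


def parse_freed_d1(packet: bytes) -> dict:
    """Parse one D1 packet into an ID and a position in millimeters.

    Assumption: the sender puts the joint position into the X/Y/Z fields.
    """
    if len(packet) != FREED_D1_LENGTH or packet[0] != FREED_D1_TYPE:
        raise ValueError("not a FreeD D1 packet")
    if freed_checksum(packet[:-1]) != packet[-1]:
        raise ValueError("FreeD checksum mismatch")
    return {
        "sender_id": packet[1],
        # Position fields are signed 24-bit values in units of 1/64 mm.
        "x_mm": _int24(packet[11:14]) / 64.0,
        "y_mm": _int24(packet[14:17]) / 64.0,
        "z_mm": _int24(packet[17:20]) / 64.0,
    }


def receive_joint_packets(port: int, count: int) -> list:
    """Read `count` D1 packets from a UDP port (the port number is hypothetical)."""
    results = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while len(results) < count:
            data, _addr = sock.recvfrom(1024)
            results.append(parse_freed_d1(data))
    return results
```

Because each sender carries a single joint, an engine that needs a full skeleton over FreeD would run one such receiver per joint, whereas the proprietary TalenTrack format delivers all joints in one stream.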

Tracking data delay (depends on engine components and hardware setup):

Approx. 1 frame if each TalenTrack camera has its own engine

Approx. 3 frames if 3 TalenTrack cameras are used per engine
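
Since the delay is given in frames, its duration in milliseconds depends on the frame rate of the production. The small sketch below converts between the two; the 50 fps value is only an assumed example rate, and `delay_ms` is a hypothetical helper name.

```python
def delay_ms(frames: float, fps: float) -> float:
    """Convert a delay in frames to milliseconds at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frames * 1000.0 / fps


# Assumed example: a 50 fps production.
print(delay_ms(1, 50))  # 20.0 ms, one camera per engine
print(delay_ms(3, 50))  # 60.0 ms, three cameras per engine
```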